Tuberculosis (TB) has been around for at least 9,000 years, and people have been searching for a cure or treatment for hundreds of years, yet it remains one of the deadliest infectious diseases in the world, killing more than 1.5 million people per year. Despite all of this motivation and effort, progress toward eradicating the disease has been only partial. Now a South Korean company, Standigm, is using its artificial intelligence (AI) platform and deep learning prediction model, in collaboration with Institut Pasteur Korea’s drug discovery platform, to design and synthesize new compounds to treat tuberculosis. The results are fed back into Standigm’s AI system to improve the learning model, and the collaboration has identified lead compounds for drug-resistant tuberculosis.

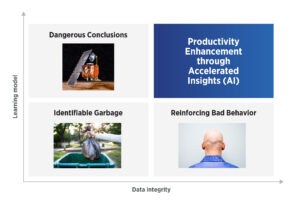

Artificial intelligence is moving faster than any technology or tool we have encountered before: it took ChatGPT only two months to reach 100 million monthly active users. But great speed and incredible power also bring the potential for errors. Putting systems and guardrails in place to identify inaccurate results is imperative to maximizing the benefits of artificial intelligence and machine learning. There are two main areas of concern: the data and the learning model.

Garbage In, Garbage Out

It’s easy to see that bad data will produce bad results, but there are nuances. ChatGPT’s training data, for example, stopped at September 2021, which can lead to misleading answers. Ask ChatGPT whether Lionel Messi is good enough to win a World Cup, and it responds with information about his talent and the importance of team play. The answer emphasizes how close he has come, without knowing that Messi in fact won the World Cup in 2022.

But beyond the raw data, context also matters. Simple oversights, such as a mismatch in units of measurement, can have catastrophic consequences. NASA’s $125 million Mars Climate Orbiter launched in December 1998 on a mission to investigate the atmosphere, climate and surface of Mars. The orbiter was due to reach its operational orbit in December 1999; on Sept. 23, 1999, it began its Mars orbit insertion burn as planned. After passing behind Mars, it was supposed to re-establish contact with Earth but never did. The failure resulted from ground software supplying thruster data in English units (pound-force seconds) that were never converted into the metric standard (newton-seconds) the navigation team expected. As a result, the orbiter missed its intended orbit and passed far too close to the planet, most likely disintegrating in the Martian atmosphere.
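
One common guardrail against this class of error is to never pass a bare number across an interface: carry the unit with the value and convert explicitly at the boundary. The minimal Python sketch below illustrates the idea; `Impulse` and `ingest_impulse` are hypothetical names, and this is not how the orbiter’s software was actually structured. The conversion factor follows from the definition of the pound-force (1 lbf = 0.45359237 kg × 9.80665 m/s² = 4.4482216152605 N).

```python
from dataclasses import dataclass

# 1 lbf = 0.45359237 kg * 9.80665 m/s^2 = 4.4482216152605 N, so
# 1 lbf*s = 4.4482216152605 N*s.
LBF_S_TO_N_S = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """An impulse value that always travels together with its unit."""
    value: float
    unit: str  # "N*s" or "lbf*s" in this sketch

    def in_newton_seconds(self) -> float:
        """Return the magnitude in newton-seconds, converting if needed."""
        if self.unit == "N*s":
            return self.value
        if self.unit == "lbf*s":
            return self.value * LBF_S_TO_N_S
        raise ValueError(f"unknown impulse unit: {self.unit!r}")


def ingest_impulse(reading: Impulse) -> float:
    """Hypothetical consumer that works in metric units.

    It never assumes the producer already used newton-seconds; it asks the
    value to convert itself, and an unrecognized unit raises an error.
    """
    return reading.in_newton_seconds()


print(ingest_impulse(Impulse(10.0, "lbf*s")))  # converted from English units
print(ingest_impulse(Impulse(10.0, "N*s")))    # already metric, passed through
```

A bare `10.0` handed to code that assumes newton-seconds would understate an English-unit impulse by a factor of about 4.45; with the unit attached, the mismatch is either converted or rejected.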

Not only is data critical for good outcomes, but so are the learning models. During COVID, with stadiums closed to fans, a Scottish football club live streamed its matches and even eliminated the need for camera operators by using an AI-driven camera. The AI had been trained to follow the ball to capture the action, but as you can see in the video above, the model behind the algorithm is just as critical as the data itself. Its training wasn’t good enough to differentiate between the ball and the bald head of a match official on the pitch, so the camera followed the bald head instead of the ball. While this example wasn’t catastrophic (except perhaps for the super fans), you can easily imagine how catastrophic an improperly trained model could be in a different situation.

Capturing the True Value of AI

As we’ve seen, success requires both high data integrity and learning models that properly represent your system. The reality is that we are not yet at a point where we can substitute artificial intelligence for our own judgement. We need to think of AI as assistive rather than as a replacement, so let’s consider another expansion of the abbreviation AI: accelerated insights. An accelerated-insights system combines high data integrity with a valid model, enabling you to reach learnings you can have a high level of confidence in, faster. Such a system requires data that:

- Is useful for predicting the desired learnings

- Has the proper context

- Is structured in a way the system can fully utilize it

- Is secure: it has not been altered or manipulated

And a learning model that:

- Has a clear objective

- Utilizes the correct type of model

- Has the correct techniques applied

- Includes a useful data visualization system

- Has a method for applying learnings

- Can apply learnings in real-time when required
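
Three of the four data requirements above (context, structure and security) can be checked mechanically before a record ever reaches a model; whether the data is useful for prediction is a modeling question. The Python sketch below assumes a hypothetical record format and schema (`REQUIRED_FIELDS`, `EXPECTED_UNITS`) and uses a SHA-256 digest captured at measurement time to detect alteration. An unkeyed hash only helps if the stored digest is itself protected; a keyed HMAC (Python’s standard `hmac` module) is the usual next step.

```python
import hashlib
import json
from typing import List

# Hypothetical schema: fields every measurement record must carry, and the
# unit each test is expected to report in.
REQUIRED_FIELDS = {"device_id": str, "test_name": str, "value": float, "unit": str}
EXPECTED_UNITS = {"IDDQ": "uA", "VDD_MIN": "V"}


def record_digest(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding, so key order does not matter."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def check_record(record: dict, expected_digest: str) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    # Structure: every required field is present with the expected type.
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"wrong type for field: {name}")
    # Context: the unit must be the one this test is expected to report.
    expected_unit = EXPECTED_UNITS.get(record.get("test_name"))
    if expected_unit is None:
        problems.append("unknown test_name")
    elif record.get("unit") != expected_unit:
        problems.append(f"unit {record.get('unit')!r}, expected {expected_unit!r}")
    # Security: the record must still match the digest captured at test time.
    if record_digest(record) != expected_digest:
        problems.append("digest mismatch: record may have been altered")
    return problems


record = {"device_id": "D001", "test_name": "IDDQ", "value": 12.5, "unit": "uA"}
digest = record_digest(record)          # stored alongside the data at capture time
print(check_record(record, digest))     # untouched record: no problems
tampered = dict(record, value=1.0)
print(check_record(tampered, digest))   # value changed after capture
```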

Accelerated Insights: Real-World Examples

The automotive industry has always demanded the highest component quality and the fewest possible test escapes. A common technique is dynamic part average testing (DPAT), which reduces test escapes by flagging outlier devices that fall outside the normal distribution. However, the distribution of a given test result across many devices and wafers is wider than the variation you would expect for any single device once you take other information about that specific device into account. Take IDDQ (quiescent supply current): the expected IDDQ result is a function of the specifics of each device. An AI model can generate an expected value derived from other device-specific measurements, narrowing the acceptable range for the device being tested, and that expected value can then be compared to the measured IDDQ. The results reveal that some parts that fall within the broad distribution of all parts are not performing as expected and would likely fail in the field. Given the quality demands of markets like automotive, solutions like this provide a means of achieving accelerated insights.
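
The sketch below contrasts the two approaches on small synthetic data sets. Population limits are set at median ± k robust sigmas (sigma estimated as IQR / 1.35); k = 6 mirrors the six-sigma limits commonly used in part average testing, but treat both choices as tunable assumptions. A straight-line least-squares fit against one other measurement of the same device stands in for the AI model; a production system would use many device-specific inputs and a richer model. All names and numbers here are hypothetical.

```python
import statistics
from typing import Sequence, Tuple


def robust_limits(values: Sequence[float], k: float = 6.0) -> Tuple[float, float]:
    """Limits at median +/- k robust sigmas, with sigma estimated as IQR / 1.35."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    center = statistics.median(values)
    sigma = (q3 - q1) / 1.35
    return center - k * sigma, center + k * sigma


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of y = a + b*x; a stand-in for a richer AI model."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def flag_outliers(ref_x, ref_iddq, test_x, test_iddq, k=6.0):
    """Return (population_flags, model_flags); True means the device is flagged."""
    # Population approach: one set of limits shared by every device.
    pop_lo, pop_hi = robust_limits(ref_iddq, k)
    population_flags = [not (pop_lo <= v <= pop_hi) for v in test_iddq]

    # Model approach: predict each device's own expected IDDQ from its other
    # measurement, then apply limits to the residual (measured - expected).
    a, b = fit_line(ref_x, ref_iddq)
    ref_resid = [yi - (a + b * xi) for xi, yi in zip(ref_x, ref_iddq)]
    res_lo, res_hi = robust_limits(ref_resid, k)
    model_flags = [
        not (res_lo <= yi - (a + b * xi) <= res_hi)
        for xi, yi in zip(test_x, test_iddq)
    ]
    return population_flags, model_flags


# Synthetic reference devices: IDDQ tracks another measurement x almost linearly.
ref_x = [10 + 2 * i for i in range(10)]
ref_iddq = [2.0 + 0.5 * x + (0.05 if i % 2 == 0 else -0.05) for i, x in enumerate(ref_x)]

# Devices under test; the second reads a plausible-looking 13.0,
# but its x value predicts an IDDQ near 8.0.
test_x = [15.0, 12.0, 25.0]
test_iddq = [9.5, 13.0, 14.5]

population_flags, model_flags = flag_outliers(ref_x, ref_iddq, test_x, test_iddq)
print("population limits flag:", population_flags)
print("model-based limits flag:", model_flags)
```

The suspect device sits comfortably inside the population limits, yet its residual against the per-device prediction is far outside the residual limits, so only the model-based check flags it.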

Another rapidly expanding area is assistive intelligence for code generation. Rather than building a test program from scratch for each device, a more powerful approach is to combine a device-specific test plan with a set of code libraries. An AI program generator can do this, but it still needs engineering oversight. Using AI lets the engineer focus on the most important part of the process, reviewing the program, which brings the expertise that only a test engineer can offer and significantly improves efficiency. You can go further still by feeding parameters such as test time and test repeatability back to the AI engine to improve the libraries. The ultimate goal is to use real-world quality data to optimize overall outgoing quality while minimizing the cost of achieving it.
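
A minimal sketch of the idea in Python: a device-specific test plan is combined with a library of reusable code templates, and anything the library cannot cover is marked for the engineer’s review rather than guessed. The library entries, parameter names and generated call syntax are hypothetical placeholders, not any real test-program language or Teradyne API. The `repeatability_ratio` helper shows one possible feedback metric, a precision-to-tolerance style ratio (six standard deviations of repeated measurements over the limit window), that could be used to rank or tune library entries.

```python
import statistics
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical code library: each entry renders one test type into program text.
LIBRARY: Dict[str, Callable[[dict], str]] = {
    "continuity": lambda p: f"run_continuity(pins={p['pins']})",
    "iddq": lambda p: f"run_iddq(vdd={p['vdd']}, limit_uA={p['limit_uA']})",
    "scan": lambda p: f"run_scan(pattern={p['pattern']!r})",
}


@dataclass
class GeneratedProgram:
    lines: List[str] = field(default_factory=list)
    needs_review: List[str] = field(default_factory=list)  # steps a test engineer must handle


def generate_program(test_plan: List[dict]) -> GeneratedProgram:
    """Combine a device-specific test plan with the shared library."""
    program = GeneratedProgram()
    for step in test_plan:
        template = LIBRARY.get(step["type"])
        if template is None:
            # No library entry for this test type: leave a marker instead of guessing.
            program.lines.append(f"# TODO({step['name']}): no library entry for {step['type']!r}")
            program.needs_review.append(step["name"])
            continue
        try:
            program.lines.append(template(step["params"]))
        except KeyError as missing:
            # The plan omitted a parameter the template needs.
            program.lines.append(f"# TODO({step['name']}): missing parameter {missing}")
            program.needs_review.append(step["name"])
    return program


def repeatability_ratio(repeats: List[float], lo: float, hi: float) -> float:
    """6 * stdev of repeated measurements divided by the limit window; smaller is better."""
    return 6 * statistics.stdev(repeats) / (hi - lo)


plan = [
    {"name": "cont", "type": "continuity", "params": {"pins": ["A0", "A1"]}},
    {"name": "iddq_low", "type": "iddq", "params": {"vdd": 0.75, "limit_uA": 50}},
    {"name": "pll_lock", "type": "pll", "params": {}},
]
program = generate_program(plan)
for line in program.lines:
    print(line)
print("needs review:", program.needs_review)
```

Keeping unknown steps as explicit review items, rather than letting the generator improvise, is what preserves the engineer’s role as the final check on the program.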

AI Will Drive Productivity Gains for New Process Nodes

There’s a lot of talk in the news about AI taking our jobs, but for now it’s more likely that your job will change. In this era of AI, data is critically important, and the likely outcome is that efforts will focus on improving the quality and diversity of the data collected during manufacturing in order to improve semiconductor processes.

One critical motivation is managing the costs of advanced process node development. A new 5nm design can cost in excess of $500 million, and building a 5nm fab requires more than $5 billion. Complexity keeps increasing at these advanced nodes, and unless the industry finds new ways to raise productivity, it will be very difficult to justify investments of this size. The semiconductor industry has continually overcome technical challenges to deliver higher performance at lower cost, but we have reached a point where another breakthrough is needed to keep the industry making progress. The productivity gains achievable with AI are essential to making advanced process nodes economical and practical, and therefore good investments for both fabs and design companies. Achieving them will require every link in the value chain to work together.

The results you can achieve with AI are absolutely real, but the systems you develop will determine the extent of the true net benefits. If you fail to ensure high data integrity, or your learning model doesn’t truly reflect the system you are influencing, the outcomes can be dangerous. But if you pay attention to the details and continuously monitor what you ultimately care about (performance, cost, productivity and more), the power of AI can be transformative.


Regan Mills is the Vice President of Marketing, and General Manager of the SOC business unit for the Semiconductor Test division at Teradyne. Prior to Teradyne, Regan held management positions at Automation Engineering Incorporated and Arctic Sand Technologies. He holds a Bachelor of Science degree in electrical engineering and computer science from the Massachusetts Institute of Technology, and a Master of Science degree in electrical engineering, control systems, digital signal processing and analog design from Boston University.